Wrap-Up

Paradox Puzzlehunt is officially over! Solutions to each puzzle have been posted, and the leaderboard has been frozen. Solution pages also include author’s notes to give some insight into the writing process of each puzzle. Of course, feel free to continue solving puzzles at your own pace if you’d like to. And if you participated in this hunt at all, please fill out our feedback form!

This wrap-up contains spoilers for the whole hunt!

Thank you so much to everyone who participated! We were excited to hear lots of enthusiastic feedback from the teams who’ve finished the hunt. We weren’t sure how large this event would be, and the scale blew us away. We had 352 teams registered, of which 208 solved at least one puzzle. 130 teams solved the first meta, and 126 solved the final meta. Our favorite part of these stats is that around 60% of teams that solved at least 1 puzzle went on to finish the hunt! We’re glad our format allowed many teams to finish the hunt without intense time pressure.
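For anyone who wants to check the percentages, here is a small sketch that recomputes them from the team counts quoted above. The variable and function names are our own choices for illustration; only the four counts come from the hunt data.

```python
# Team counts quoted in the wrap-up.
registered = 352
solved_any = 208
solved_first_meta = 130
solved_final_meta = 126


def share(part: int, whole: int) -> float:
    """Return part/whole as a percentage rounded to one decimal place."""
    return round(100 * part / whole, 1)


# Share of registered teams that solved at least one puzzle.
active_rate = share(solved_any, registered)
# Shares of those active teams that solved each meta.
first_meta_rate = share(solved_first_meta, solved_any)
finish_rate = share(solved_final_meta, solved_any)

print(f"Solved at least one puzzle: {active_rate}% of registered teams")
print(f"Solved the first meta: {first_meta_rate}% of active teams")
print(f"Finished the hunt: {finish_rate}% of active teams")
```

The last figure is the "around 60%" completion rate mentioned above.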

This wrap-up will pull back the curtain a bit to give you some insight into our hunt planning process and our future plans. We hope this information will be helpful to people in similar situations to us who are interested in running their own hunts.

Writing Process

We’ve been doing puzzlehunts for a couple years, and we really enjoy them. We feel like the hunt community could always benefit from the existence of more hunts, so we decided to try writing one ourselves.

We took a huge amount of inspiration from two main sources: Puzzlepalooza and Puzzle Potluck. These were some of the first hunts we participated in, and they remain among our favorites. Both of these hunts give you all the feeder puzzles as soon as the hunt starts, which we as solvers enjoy. It helps avoid bottlenecks and lets solvers skip puzzles they don’t find fun. We also really like Puzzle Potluck’s guilt-free hint structure, so we decided to model our hints after theirs. We knew from the outset that we wanted to run a smaller-scale hunt, one that would be solvable in a day by highly experienced teams.

This hunt was born when Ben decided to write a puzzle with SEVEN NATION ARMY as the answer. The desire to include this answer eventually led to the CENTER WORD OF TEN SNAPPLE CAP FACTS metapuzzle. From there, we decided on a Soda Fountain of Youth theme.

We think the theme of youth is fitting since we started working on this hunt pretty much exactly when we started college, beginning our transition into adulthood. We also really like the soda fountain / fountain of youth pun. The idea of a soft drink theme is somewhat absurd, but we like that it’s out of the box.

With the constraints of the meta set, we next came up with the set of 10 feeder answers we wanted to use. In most cases, we came up with our puzzle ideas based on the answer we needed instead of the other way around. For example, FOOL AROUND lent itself perfectly to a Wheel of Fortune tarot card puzzle. For most of the writing process, we referred to puzzles by their answers rather than their titles. We think this answer-first writing process was largely successful.

Most of the writing process consisted of the two of us throwing puzzle ideas at each other and seeing what stuck. We had a very basic outline of the theme/style we wanted for most puzzles just based on the answer, but coming up with specific mechanics to realize the theme proved difficult. We spent many a night discussing random puzzle ideas, most of which went nowhere. When we finally stumbled upon a promising mechanic, the actual writing process was usually relatively quick. The notable exception is A Crossover - we knew more or less exactly what the mechanic would be, but constructing the grid took a long time.

We don’t have a large network of puzzle-loving friends, so finding people to testsolve was somewhat difficult. Luckily, we did manage to find a couple teams to do full-hunt tests. Our testsolving process was not very organized - we basically just played it by ear and asked people to test as soon as we were happy with all the puzzles.

The final release date of the hunt was similarly played by ear - we guesstimated that we’d have everything done by early May, since we’d both have a bit of time off school to focus exclusively on the hunt. Fortunately, the timing worked out and we got everything ready in time - even if we were scrambling to get some of the website and logistics finished at the end.

Web Platform (written by Ian Rackow)

This was my first time running the web infrastructure for a puzzlehunt, and I was pleasantly surprised that things ran as well as they did! While I had no previous experience with puzzle-specific platforms, I was very familiar with CTFd, a platform used to host Capture the Flag cyber-security contests, which have a very similar competition structure (individual challenges with a unique string as an answer). CTFd is extremely simple to set up and deploy, and it makes building simple HTML pages easy. As a one-man web infrastructure team, I decided to go with it instead of something less familiar but more tailored to hunts, like the Galactic Puzzlehunt site, which I would try to use if I ran tech for a hunt again with more help. There were certainly some benefits to this approach, but our platform probably ended up being more confusing and less functional than we would have liked.

Benefits:

- My prior familiarity, and no need to alter anything at all from the backend code

- Page creation and HTML editor all with a very user-friendly interface (accessible for people not too familiar with web development)

- Good scoreboard with pretty graph

- Built in notifications for errata/updates

Drawbacks:

- No ability to nudge people with close answers and no guess log (typos, missing a word, “keep going!”s). This was bad for “Tidal” where we could have more directly instructed people to “make and email us a meme”, but luckily people managed to figure that out

- Hard to customize scoring besides setting point value (i.e. we couldn’t keep first-solve on the Meta as a tiebreaker on the scoreboard page).

- Answers had to be submitted on the “Challenges” page instead of on the same page as the puzzle

- Too much extra clicking around between pages, which added confusion

- Issues getting the email server integration to work, so password resets had to be manual
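To make the first drawback concrete, here is a minimal sketch of the kind of answer checking with nudges and a guess log that we were missing. This is not CTFd code or its API; every name here (`Puzzle`, `GuessLog`, `check_guess`) is hypothetical, and the nudge entry is invented for illustration. Only the answer FOOL AROUND comes from the hunt itself.

```python
import re
from dataclasses import dataclass, field


def normalize(guess: str) -> str:
    """Uppercase the guess and drop everything except letters and digits."""
    return re.sub(r"[^A-Z0-9]", "", guess.upper())


@dataclass
class Puzzle:
    title: str
    answer: str
    # Near-miss guesses mapped to the message a team would see.
    nudges: dict = field(default_factory=dict)


@dataclass
class GuessLog:
    # Each entry is (team, puzzle title, raw guess, verdict).
    entries: list = field(default_factory=list)

    def record(self, team: str, puzzle: Puzzle, guess: str, verdict: str) -> None:
        self.entries.append((team, puzzle.title, guess, verdict))


def check_guess(puzzle: Puzzle, team: str, guess: str, log: GuessLog) -> tuple:
    """Return (verdict, message) and record the raw guess in the log."""
    cleaned = normalize(guess)
    nudges = {normalize(key): message for key, message in puzzle.nudges.items()}
    if cleaned == normalize(puzzle.answer):
        verdict, message = "correct", "Correct!"
    elif cleaned in nudges:
        verdict, message = "nudge", nudges[cleaned]
    else:
        verdict, message = "incorrect", "Incorrect."
    log.record(team, puzzle, guess, verdict)
    return verdict, message


# FOOL AROUND is the answer named in this wrap-up; the nudge entry is invented.
wheel = Puzzle("Wheel of Fortune", "FOOL AROUND", nudges={"FOOL": "Keep going!"})
log = GuessLog()
print(check_guess(wheel, "Example Team", "fool around", log))
print(check_guess(wheel, "Example Team", "Fool", log))
print(check_guess(wheel, "Example Team", "ICARUS", log))
```

Because guesses are normalized before comparison, spacing and capitalization do not matter, and the log keeps the raw guess so that organizers can spot common wrong answers.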

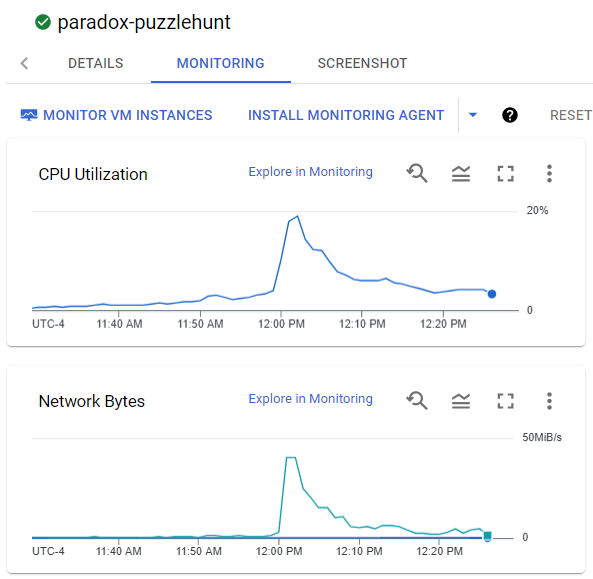

To host the site, I used a free trial of Google Compute Engine, which had more than enough capacity for the load we experienced. CTFd comes with a pre-made docker-compose configuration, which I only had to modify to add SSL certs with nginx and increase the number of gunicorn workers. The Google Cloud Platform trial comes with $300 in free credits, which was more than enough for a very nice and overkill Compute Engine instance (16 vCPUs, 60 GB of memory) for the week. We only ended up using about $50 of those credits and could have been fine on ~$10 if I had downgraded the server after the first-hour rush. Naturally, traffic spiked and hit its maximum right at 12:00 EDT on Saturday when the hunt opened, but we were still well below load capacity.
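As a rough guide for picking a worker count, gunicorn's documentation suggests starting from (2 x cores) + 1. The sketch below applies that rule of thumb; the function name is hypothetical, and this is not necessarily the number used for this hunt, only what the rule would give for a 16-vCPU instance.

```python
import os
from typing import Optional


def suggested_workers(cpu_count: Optional[int] = None) -> int:
    """Apply gunicorn's documented starting point: (2 x cores) + 1 workers."""
    # Fall back to the local machine's core count when none is given.
    cores = cpu_count if cpu_count is not None else (os.cpu_count() or 1)
    return 2 * cores + 1


# Worker count the rule of thumb gives for a 16-vCPU instance.
print(suggested_workers(16))
```

Treat the result as a starting point to tune under real load rather than a fixed answer.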

We did have to restart the server after an unknown error knocked out the answer submission page around 1:00 EDT and again at 2:45 EDT on the first day, but after that things were quite smooth. Starting Saturday night, I added a cronjob to restart the server every so often so that I didn’t need to monitor as actively in case things went down after that (sorry if 502 errors popped up for a few seconds here and there; these were likely due to one of those automated restarts). Most importantly, the core functionality held up with relatively little maintenance work on the straight off-the-shelf CTFd release. Feel free to reach out if you want any more details about the setup. CTFd with a little more backend modification could end up being a good option for new hunts that need something simple, like I did.
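One alternative to restarting on a fixed schedule is to restart only when a health check fails, which would avoid most of the brief 502s. The following is a minimal sketch of that idea, not what was actually run for this hunt. The URL, the restart command, and the `ctfd` service name are all assumptions to be replaced with your own values.

```python
import subprocess
import urllib.error
import urllib.request
from typing import Callable

# Hypothetical values: substitute your own site URL and restart command.
SITE_URL = "https://hunt.example.org/challenges"
RESTART_CMD = ["docker", "compose", "restart", "ctfd"]  # assumed service name


def is_healthy(url: str, timeout: float = 5.0,
               opener: Callable = urllib.request.urlopen) -> bool:
    """Return True if the URL answers with a 2xx status within the timeout."""
    try:
        with opener(url, timeout=timeout) as response:
            return 200 <= response.status < 300
    except (urllib.error.URLError, OSError):
        # Covers HTTP errors (such as 502), refused connections, and timeouts.
        return False


def restart_service() -> None:
    """Run the (assumed) restart command and raise if it fails."""
    subprocess.run(RESTART_CMD, check=True)


def check_and_restart(check: Callable[[], bool],
                      restart: Callable[[], None]) -> bool:
    """Restart only when the check fails; return True if a restart happened."""
    if check():
        return False
    restart()
    return True


# Intended to be run from cron every minute or so, for example:
#   check_and_restart(lambda: is_healthy(SITE_URL), restart_service)
```

The check and restart steps are passed in as functions so that the logic can be exercised without touching a real server.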

Takeaways and Lessons

We’re proud of how the hunt went overall, but there are definitely some things we could do better next time. We think the three biggest areas for improvement, excluding web issues, are when we write the meta, how we test, and how we account for solvers from backgrounds different from our own.

We definitely should have written and finalized our meta in its entirety before working on feeder puzzles. The structure of our final meta wasn’t set in stone until just before the hunt began. The metas in the final product weren’t awful, but they were less polished than we would have liked. And they could have easily ended up much worse due to our poor planning - there was only one Snapple cap with a center Q, meaning that with slightly worse luck our cluephrase would’ve been impossible and we’d have only realized halfway through writing the hunt.

There was also a lot of room for improvement in how we organized our testsolving. We should have figured out who we would get to test well in advance, we should have tested individual puzzles in an organized way, and we should have scheduled our tests well in advance of the hunt launch. Most importantly, we should have thoroughly tested the metas before we started writing and testing individual puzzles.

Finally, we should have realized that not every solver comes from the same background as us. The hunt included several references to the U.S. that proved confusing for international solvers, as well as references to meme culture that confused older solvers. We honestly didn’t expect to reach such a multifaceted audience, so we’ll definitely keep these factors in mind for the future. We'd also like to know about any other areas people struggled with due to their background - if you or a teammate had extra difficulty with a certain part of the hunt, please send us an email and let us know!

Future

There will be a Paradox Puzzlehunt 2! Eventually. We were thrilled by the positive reception to this hunt, and we had a ton of fun writing and running it, so we see no reason to stop. We’d like to expand our team before the next hunt - a core team of two people feels a bit small at times. We already have a few ideas for things we’d like to include in the next hunt, but we have no clue when that next hunt will be.

Credits

- Creative Leads: Ben Coukos-Wiley, Jonah Nan

- Puzzle Writers: Ben Coukos-Wiley, Jonah Nan, Kevin Zhou

- Website: Ian Rackow, Ben Coukos-Wiley, Amber Gong

- Art (Soda Fountain and Wheel of Fortune): Amber Gong

- Logo Design: Logan Oplinger

- Memes and Misc. Image Editing: Ben Coukos-Wiley

- Testsolvers: inteGIRLS, Alex Grosman, Jade Palosky, Gosley Brodkin, Iris Sun, Julia Gelfond, Patrick, Rachel, and Bryan

Submission Hall of Fame

| Puzzle | Submission | Team | Award | Notes |
|---|---|---|---|---|
| Tidal | ELONMOLLUSK | Mobius Strippers | Funniest | We wrote a puzzlehunt for our high school including a character named Eelon Musk. This brings back memories. |
| One Small Step | CORRECTANSWER | Busy Bees With Lots of Cheese | Most Audacious | |
| Wheel of Fortune | ICARUS | Show Off That Cow | Most Creative | This was submitted right after FOOL AND SUN, so we assume it's a very clever reuse of mechanics |
| The Fountain | COTTONWOOD OF TELECOMMUNICATIONS | that's not green, it's sand | Most Absurd | The enumeration and a few letters are the same, but we can't say this would've been a good meta answer |
| Tidal | like summer marmalade | The Mysterious Benedict Society | Best Random Anagram | This is a mostly correct anagram of the letters extracted from Tidal, but is a rather whimsical answer |
| Transfiguration | MARIETTA EDGECOMBE | At least 50 teams | Worst Red Herring | We also saw CHO CHANG, RITA SKEETER, SIRIUS BLACK, SEVERUS SNAPE, XENOPHILIUS LOVEGOOD, and MUNDUNGUS FLETCHER |
| Stars and Stripes | I Will Always Think About You | Yuki | Most Valid | "I Will Always Think About You" is a song by the New Colony Six, a band that unintentionally fits perfectly into the final cluephrase of Stars and Stripes |
| Stars and Stripes | INDIVISIBLE | Belmonsters | Most Insightful | "INDIVISIBLE" was actually the working title for Stars and Stripes, so we were surprised to see it turn up as an answer |
| Tidal | MAKEASURREALUSAMEME | ⌊π⌋ | Victim of Spacing | "MAKE A SURREAL USA MEME" perhaps should have been the working title of Stars and Stripes |
| 4 different puzzles | poison | 5 different teams | Common Theme | We understand why this was submitted for Truth Table, but we have no idea why it kept cropping up elsewhere |
| Truth Table | bigbean | Exquisite Crap of r/PictureGame | Dynamic Duo | This never failed to make us laugh |
| Playtime | large evergreen | Pluru | Dynamic Duo | And neither did this |
| The Fountain: Part 2 | A CUP OF SODA HAS MORE CALORIES IN A QUARANTINE | Ceci n'est pas une puzzlehunt team | Most Relatable | It certainly does feel that way, doesn't it? |
| A Crossover | BALLS | Non-Explainable Statement | Most Inexplicable | We came up with the award title separately, but it's a little spooky their team name matches |
| The Fountain: Part 2 | water fountain | 4 different teams | Better Than the Real Answer | The teams were: Non-Explainable Statement, Estranged Cousin of /r/PictureGame, The Inedibles, and moreaboutvikings |
| One Small Step | chromatic abberation | 14 separate teams | Biggest RIP | Ben made this same typo while writing the puzzle. Multiple times. |

Best of The Memes

Submitted with the caption "When you make Ande mail US a meme"

Submitted with the caption "When you host your hunt on CTFd"

We'd also like to give a shoutout to the teams that had the audacity to put their own meme as their favorite from Tidal on the feedback form: Stumped and Furious, Jeff's Jaboticabas, and This Sentence Bears Inaccuracy.